I’m currently finishing Matt Crawford’s third book, Why We Drive. His first book, Shop Class As Soulcraft, arrived just when I was transitioning out of years of academic classrooms into technology teaching, and it helped me reframe my understanding of my manual skills, which are generally seen as less-than by the education system I work in.

Why We Drive looks at how we’re automating human agency under the veil of safety, ease of use and efficiency. But in examining the work of the companies providing this technology, Crawford ends up uncovering a nasty new version of voracious surveillance capitalism at work in the background.

Crawford comes at this from the point of view of driving because Google and the other attention merchants are very excited about moving us to driverless cars in the near future, and Crawford is skeptical about their motivations for doing this. From Shop Class As Soulcraft to The World Beyond Your Head and now in Why We Drive, Crawford has always advocated for human agency over automation, especially when that automation is designed to simplify and ease life to the point where it’s obvious we’re heading for a Wall-E-like future of indolent incompetence in the caring embrace of an all-powerful corporation.

Situated intelligence is a recurring theme in Crawford’s thinking and he sees it as one of the pinnacles of human achievement. He makes strong arguments for why surveillance capitalists aren’t remotely interested in human agency and the situated intelligence it leads to, and he fears that this will ultimately damage human capacity. Among the many examples he gives is that of London taxi drivers:

|

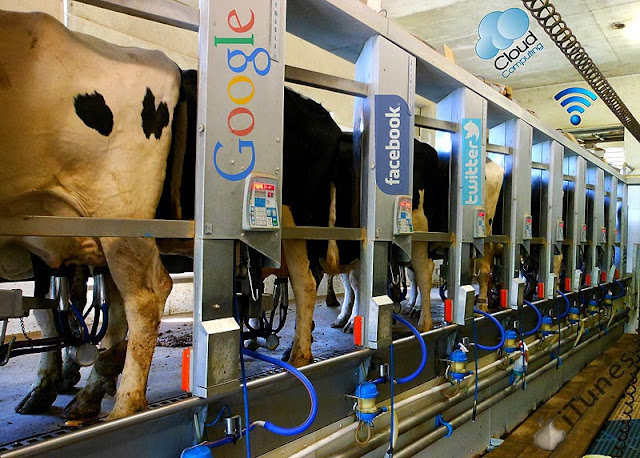

‘Free’ means something different in surveillance

capitalism. Note the accessibility and simplicity,

a common idea in edtech marketing, because

learning digital tools doesn’t mean understanding

them, it means learning to consume on them. |

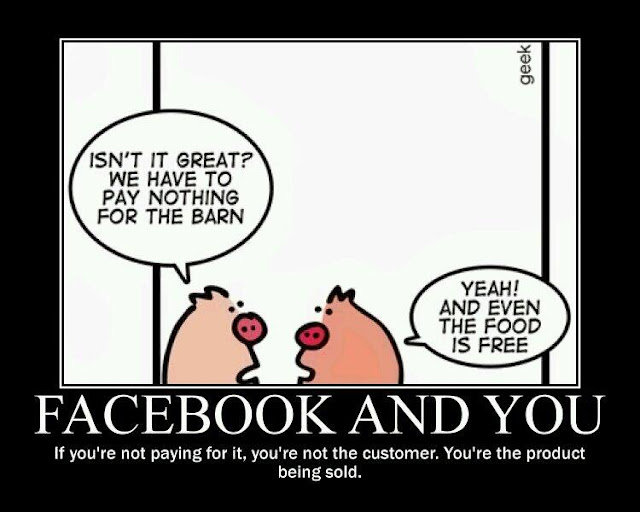

I can’t help but see parallels with educational technology. We recently had another technology committee meeting where it was decided that once again we would buy hundreds of Google Chromebooks: “simple yet powerful devices with built-in accessibility and security features to deepen classroom connections and keep user information safe”. Notice the hard sell on safety and security, like something out of Tesla and Uber’s misinformation marketing plans. The reason your student data is safe is that Google is very protective of ‘its’ data, and make no mistake, once you’re in Google’s ecosystem, your data IS their data.

These plug into our ‘walled garden’ of Google Education products that keep iterating to do more and more for students and staff until they’re sending emails no human wrote and generating digital media automatically, all while saving every aspect of user input. Board IT and I argued for a diversity of technology in order to meet more advanced digital learning needs, but advanced digital learning isn’t what we’re about, even though we’re a school. Digital tools now mean ease of use and cost savings (though this is questionable); they are no longer tools for learning, as they increasingly do the work for us.

As Crawford suggests, the intention of these tools is ultimately to automate our actions and direct us towards a purchase. The fact that we’re dropping millions of dollars in public funding on, at best, familiarizing students with their future consumer relationship with technology is astonishing. As big tech gains access to increasingly personal information, like your geographic location, patterns of movement and even how you ergonomically interact with a machine, personal data gets harder to anonymize. The push is to get into all aspects of life in order to collect data that will serve the core business…

Crawford offers example after example of technology companies that sell their products under the unassailable blanket of safety, ease of use and efficiency. This too has crept into education technology, where instead of taking personal responsibility for our use of technology we surrender that critical effort to the inscrutable powers that be. One of the intentions of the new normal is to produce people who do not question authority, because a remote, cloud-based authority is unquestionable.

From Shop Class forward, Crawford has been critical of the ‘peculiarly chancy and fluid’ character of management thinking, which also falls easily into the safety/automation argument being provided by the richest multinationals in the world. That system managers fit in well with system think shouldn’t be a surprise, but for anyone left in the education system who is still trying to focus on developing situated intelligence, it’s a completely contrary and damaging evolution. I shouldn’t be surprised that the people running things want to cut out the complexity in favour of safety and ease of use (even if that isn’t what’s really being offered), but any teacher thus focused has lost the plot.

There are still questions around how student data is used by Google. Crawford highlights how location data can’t be anonymized (it’s like a fingerprint and very individually specific), so even if your corporate overlord isn’t putting a name on a data set, they can still tell whose data it is. Location data is a very rich vein of personal information to tap if you’re an advertising company, which is why Google is interested in developing self-driving cars and getting everyone into convenient maps. Unless you’re feeding their data gathering system, they don’t lift a finger.

Crawford goes so far as to describe this as a new kind of colonialism that we’re all under the yoke of, but passive analysis isn’t the end goal. He shows experiments like Pokemon Go (created by Niantic, which began as an internal Google startup) as a test in active manipulation. The goal isn’t to create a new level of advertisement based on predictive algorithms, it’s to build an adaptive system that can subtly manipulate user responses without them even realizing it. In doing so he also explains why so many people are feeling so disenfranchised and are making otherwise inexplicable, populist political decisions:

Google’s mapping projects are situated in colonialist intent (empires make maps in order to control remote regions). By mapping the world and giving everyone easy access to everywhere, local knowledge becomes worthless and a remote standard of control becomes a possibility. Smart cities are shown in this light. All ‘smart’ initiatives, from edtech to smart cities, follow the same ease of use/efficiency/safety/organizational marketing language. This language is unassailable (are you saying you don’t want efficiency, safety, ease of use and organisation?). This thinking is so ubiquitous that even trying to think beyond it is becoming impossible. Though tech-marketing suggests that ease/efficiency/safety is the intent, the actual point is data collection to feed emerging markets of predictive and influencer marketing; digital marketing is Big Brother. Orwell was right, but he couldn’t imagine a greater power than centralized government in the Twentieth Century. The Twenty-First Century produced the first world governments, but they are corporations driven by technology-enabled mass data gathering that are neither by nor for the people.

There is no way out of the endless cage Google is constructing. Self-driving cars and driving itself are the mechanism by which Crawford uncovers an unflattering and insidious form of capitalism that has already damaged our political landscape and looks set to damage human agency for decades to come under the guise of safety, efficiency, ease of use and security.

Any criticism of this is in violation of the cartel that supports and is supported by it, and results in a sense of alienation that leads to anger and populist resentment. Governments, including public education, can’t tap into this ‘free’ technology fast enough, but of course it isn’t free at all, and what we gain in easy, efficient and safe comes at the cost of the freedom of action it takes from us.

“While it is impossible to imagine surveillance capitalism without the digital, it is easy to imagine the digital without surveillance capitalism. The point cannot be emphasised enough: surveillance capitalism is not technology. Digital technologies can take many forms and have many effects, depending upon the social and economic logics that bring them to life. Surveillance capitalism relies on algorithms and sensors, machine intelligence and platforms, but it is not the same as any of those.”

There was a time when digital technology wasn’t being driven by advertising. The early internet wasn’t the orderly, safe and sanitized place it is becoming, but it was a powerful change in how we worked together as a species. I don’t know that I buy into all of Matt’s arguments in Why We Drive, but his fundamental belief that we should be using technology to enhance human ability rather than replace it is something I can’t help but agree with, and any teacher focused on pedagogy should feel the same way.

Why We Drive is the latest in a series of books and media that is, after years of political and psychological abuse, looking to provide society with a white blood cell response to surveillance capitalism. Rather than taking some of the most powerful technology we’ve ever created and aiming it at making a few psychopaths rich while enfeebling everyone else, my great hope is that our understanding of this nasty process will give us the ability to take back control of digital technologies and develop them as tools to enhance human capabilities instead. We need to do that sooner rather than later because the next century is going to decide the viability of the human race for the long term, and we need to get past this greed and short-sightedness in order to focus on the bigger problems that face us. We could start in education by taking back responsibility for how we use and teach our children about digital technologies.

***

I’ve long been raging against the corporate invasion of educational technology:
