I’m having one of those intersectional moments where my recent work in AI, coding and cybersecurity has me thinking about ways we can fix the worst parts of our digital adolescence. Media like the tweet below are wearing everyone down, but I think this is a digital media problem that digital media can help resolve:

Together, we can reignite the heart & spirit of our youth.— Stephen Lecce (@Sflecce) February 15, 2020

In this case an elected official is claiming to support children with special needs while at the same time doing the opposite behind the scenes, even going so far as ignoring signed contracts and cancelling support. As I watched this misinformation I wondered why the digital system delivering it (Twitter in this case) couldn’t include links and information to clarify what I’m watching. Doing so would help users understand when they are being misled. Can you imagine a digital media ecosystem that actually encourages truth and accuracy instead of what we have now?

From a data management point of view, rhetoric and political spin should bump up against a scientific analysis of fact, based initially on volume of data. Facts tend to have more data behind them (proving things takes time and information). If we attack this as a big-data computer science project, statements made by politicians could be corroborated by connecting them to supporting digital information in real time. I dream of the day when I’m watching a politician’s speech live online in any browser (this should be baked into every browser) alongside an AI-driven analytical tool that leverages the digital sea of information we live in to validate what is being said.

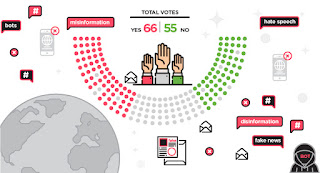

This information enrichment would do two things. Firstly, it would create a truth-tendency-over-time metric that would allow voters to more accurately assess the accuracy of what politicians, news outlets and even each other are saying: a kind of digital reputation. Secondly, having an impartial analysis of social activity in real time would highlight and mitigate fake news and help social media resolve its terrible handling of misinformation.
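To make the truth-tendency idea a bit more concrete, here is a minimal Python sketch of how such a running score might be kept. Everything in it is my own assumption for illustration: the `SourceRecord` name, the add-one smoothing, and especially the idea that something upstream has already decided whether each statement was accurate, which is the genuinely hard part and isn't shown here.

```python
from dataclasses import dataclass


@dataclass
class SourceRecord:
    """Running tally of fact-checked statements for one source (hypothetical)."""
    name: str
    checked: int = 0
    accurate: int = 0

    def add_check(self, was_accurate: bool) -> None:
        """Record the outcome of one fact-checked statement."""
        self.checked += 1
        if was_accurate:
            self.accurate += 1

    def score(self) -> float:
        """Smoothed share of accurate statements, between 0 and 1.

        Add-one smoothing starts an unknown source at 0.5, so a single
        check cannot swing the score all the way to 0% or 100%.
        """
        return (self.accurate + 1) / (self.checked + 2)

    def tag(self) -> str:
        """Short label an overlay could show beside the source's name."""
        return f"{round(self.score() * 100)}% accuracy"


# Made-up example: ten checked statements, only one of them accurate.
record = SourceRecord("example_account")
for outcome in [True] + [False] * 9:
    record.add_check(outcome)
print(record.tag())  # 17% accuracy
```

The smoothing is a design choice rather than a requirement: it keeps a brand new account from being branded on the strength of one post, while a long record of checked statements still dominates the score.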

There are layers and layers to digital misinformation. As we’ve moved from lower bandwidth media like text through still images to video, misinformation is keeping up, often under the guise of marketing. You can’t trust anything you see online these days.

It’s a new form of media literacy that most people are unaware of. There are plugins attempting to battle photoshopped images and videos that should help stem that tide of misinformation. Movement on this is fast because parsing image and video data is a more mathematically biased problem, but intentional misinformation either created or shared is also something machine learning systems can get better and better at identifying as they learn the peculiarities of why humans lie to each other.

In the case of something like Vaughan Working Families, a fake organization created by wealthy government supporters to spread misinformation, the misinformation was fairly easily identifiable by looking into the group’s history (there is none). That lack of data is a great starting point in training an AI big data analysis system to respond live to misinformation: the truth always weighs more because of the evidence needed to support it.
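As a rough illustration of using a lack of history as a first signal, here is a small Python sketch. The function name, the thresholds and the example dates are all made up for the example, and it assumes some other system can already tell us when a source first appeared and how many independent mentions of it exist.

```python
from datetime import date


def track_record(first_seen: date, independent_mentions: int, today: date,
                 min_age_days: int = 365, min_mentions: int = 5) -> str:
    """Label a source by how much history stands behind it.

    The thresholds are arbitrary placeholders, not tuned values.
    """
    age_days = (today - first_seen).days
    too_new = age_days < min_age_days
    too_quiet = independent_mentions < min_mentions
    if too_new and too_quiet:
        return "no track record"
    if too_new or too_quiet:
        return "thin track record"
    return "established"


# Hypothetical group that appeared weeks ago with nothing written about it.
print(track_record(date(2020, 1, 1), 0, today=date(2020, 2, 15)))
# Hypothetical long-standing organization with plenty of outside coverage.
print(track_record(date(2005, 6, 1), 120, today=date(2020, 2, 15)))
```

A label like this proves nothing on its own, since plenty of honest groups are new, but it is cheap to compute and tells an overlay where to look harder.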

We do IBM Watson chatbot coding in my grade 10 computer engineering class, and it is interesting to watch how the AI core picks up information and learns it. As it collects more and more information, and supported by students teaching it parameters, it very quickly picks up the gist of even complex, non-linear information. Based on that experience, I suspect a browser overlay that offers a pop up of accurate, related information in real time is now possible.

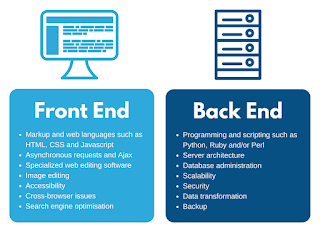

In software you have the front end that faces the user and the back end that does the heavy lifting with data. In the cloud-based world we live in, with people sharing massive amounts of data online, an unbiased, ungameable, transparent AI-driven fake news overlay would go miles toward repairing the terrible history Facebook, Google, Microsoft and the rest have of interfering with democracy. This shouldn’t be something squirrelled away and only available to journalists. It should be a technical requirement for any browser.

With that unblinking eye watching the dodgy humans, not only would politicians be held to a higher standard, but so would everyone. Those quiet types who happily retweet and share false information are complicit in this information virus. If your Twitter account ends up with a red 17% accuracy tag because you regularly create and share misinformation, then I’d hope it results in fewer people being interested in following you, though I don’t personally have a lot of faith in people to do even that. Left to our own devices, or worse, chasing the money, we’ve made a mess of things by letting digital conglomerates disrupt institutions that took years to evolve into pillars of civil society. It’s time to demand that they use the same technologies they are leveraging now to fix it.

We’re obviously too lazy or too self-interested (or both) to make a point of fact checking our social media use. If we’re all on there sharing information, we should all make our best effort to share it accurately. This could help make that happen. It would also go a long way toward preventing the cyber-crime epidemic we live in, which thrives on this kind of hyperbole and irrational response.

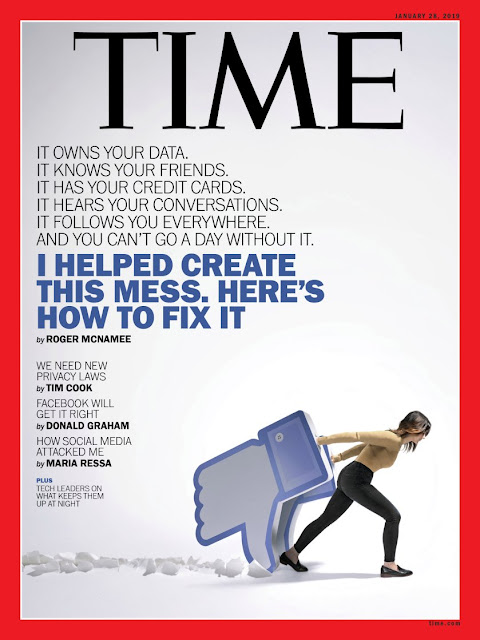

There have been some attempts by charitable organizations and students to create online fact checkers, but the browser creators (Google, Microsoft, Mozilla, etc.) and social media giants (Facebook, Twitter, etc.) don’t seem to be the ones doing it, even though they’ve gotten rich from this misinformation and damaged our ability to govern ourselves as a result. Law can’t keep up with our technological adolescence and the data avalanche it has produced, but the technology itself is more than able.

We increasingly depend on people, often amateurs with little or no funding, to do our online fact checking, but the sheer volume of information, especially when driven by automated processes like bots, makes that unscalable. This is something that professional journalists used to do (at least I hope they used to do it, because not many are doing it now). However, the financial pressure on those institutions due to digital disruption means they are now more than happy to take inaccurate and misleading information and share it if it makes them somewhat relevant again. The only way to address this situation is by leveraging the same technology that caused it in the first place.

What do you say, tech billionaires? Could we redesign our digital media browsing so it encourages accurate information rather than making it irrelevant? You might have to actually put some financial support into this, since you’ve effectively dismantled many of the systems that used to protect the public from misinformation.
